Feature Engineering That Really Moves RMSE

How to slice the noise out of tabular data and serve your model the purest signal.

Published: Jul 17, 2025

You train a dozen models, grid‑search every hyper‑parameter, and still stare at a root mean squared error (RMSE) that won’t budge. When the features are weak, the model can only guess.

Poorly engineered features hide the true shape of the data. They make gradient boosts wander, leave linear models half‑blind, and burn GPU hours on noise. Your competition score stalls and your confidence dips.

Shift the effort from algorithm hunting to feature crafting. This guide walks through four moves — skew fixing, domain ratios, safe target encoding, and leak‑proof pipelines — using Kaggle’s House Prices data. Each move is tiny; together they can drop RMSE significantly.

Like practical ML guides that respect your time? Follow me on X (@CoffeyFirst).

Get Familiar with the Raw Data

The Ames dataset ships 1,460 historical sales and 79 raw columns — numbers, categories, and a sprinkling of missing values.

import pandas as pd

train = pd.read_csv("train.csv").drop("Id", axis=1)

print(train.shape)  # (1460, 80)

First Checks

- Data types: verify which integers are actually categoricals (MSSubClass).

- Missing values: surface counts early; plan imputations.

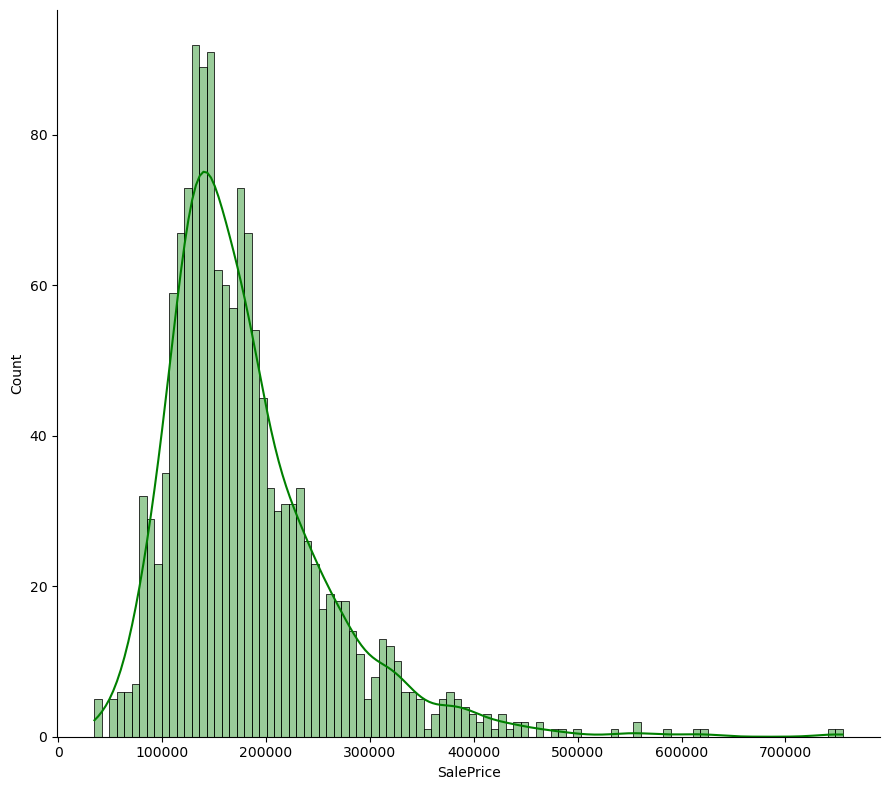

- Target log: plot SalePrice; note the right‑skew tail.
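The three checks above can be sketched as one small helper. This is a minimal illustration, not part of the original workflow: the function name first_checks, the max_levels threshold, and the six-row demo frame are all assumptions made for the example.

```python
import numpy as np
import pandas as pd


def first_checks(df: pd.DataFrame, target: str, max_levels: int = 20) -> dict:
    """Summarise categorical suspects, missing values, and target skew."""
    # Integer columns with few distinct values are often coded categories.
    suspects = [
        c for c in df.columns
        if c != target
        and pd.api.types.is_integer_dtype(df[c])
        and df[c].nunique() <= max_levels
    ]
    # Missing counts, largest first, only for columns that have any.
    missing = df.isna().sum()
    missing = missing[missing > 0].sort_values(ascending=False)
    return {
        "categorical_suspects": suspects,
        "missing": missing,
        "target_skew": float(df[target].skew()),
    }


# Tiny hypothetical frame standing in for train.csv
demo = pd.DataFrame({
    "MSSubClass": [20, 60, 20, 120, 60, 20],
    "LotFrontage": [65.0, np.nan, 80.0, np.nan, 70.0, 60.0],
    "GrLivArea": [1710, 1262, 1786, 1717, 2198, 1362],
    "SalePrice": [208500, 181500, 223500, 140000, 250000, 755000],
})

# max_levels is lowered here only because the demo frame has six rows.
report = first_checks(demo, target="SalePrice", max_levels=3)
print(report["categorical_suspects"])
print(report["missing"])
print(report["target_skew"] > 0)
```

On the real frame the default threshold is a starting point; anything it flags still needs a look at the data dictionary before you recast it.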

Tame Skew with Box‑Cox and Log Transforms

Why Skew Hurts

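Right‑skewed features inflate variance and let a handful of extreme values dominate the fit of linear models, biasing their coefficients. Tree splits are far less sensitive to monotonic feature transforms, but a skewed target still pulls squared‑error losses toward the most expensive homes. A quick train.hist() shows heavy tails in LotArea, GrLivArea, and even the target.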
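To put a number on "heavy tail", here is a small sketch on synthetic data. The log-normal sample is a hypothetical stand-in for a column like LotArea, not the Ames values themselves.

```python
import numpy as np
from scipy.stats import skew

rng = np.random.default_rng(0)
# Hypothetical right-skewed "area" values drawn from a log-normal distribution
area = rng.lognormal(mean=9.0, sigma=0.6, size=5000)

raw_skew = skew(area)
log_skew = skew(np.log1p(area))
print(f"raw skew: {raw_skew:.2f}, after log1p: {log_skew:.2f}")
```

A skewness near zero after the transform is the signal that the tail has been pulled in.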

Three‑Step Fix

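1. Transform the target first so evaluation is fair: the competition scores RMSE on log prices, so errors on cheap and expensive homes count equally.

import numpy as np

y = np.log1p(train["SalePrice"])

2. Quantify feature skew, leaving the target out.

from scipy.stats import skew

num_cols = train.drop(columns="SalePrice").select_dtypes(include=["int64", "float64"]).columns
skewed = train[num_cols].apply(lambda s: skew(s.dropna())).abs()
skewed = skewed[skewed > 0.75]

3. Apply Box‑Cox to the skewed columns.

from scipy.stats import boxcox

for col in skewed.index:
    values = train[col].astype(float)
    mask = values.notna()  # Box-Cox cannot be fit through NaNs
    # The +1 shift keeps zero-valued entries strictly positive, as Box-Cox requires
    values[mask], _ = boxcox(values[mask] + 1)
    train[col] = values

This overwrites the raw columns in place, so derive any features that add or multiply raw measurements from an untransformed copy.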
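Box‑Cox estimates a lambda from the data it sees, so the same fit-on-train discipline applies to it as to any other learned transform. A minimal sketch, assuming a synthetic log-normal column as a stand-in for LotArea: estimate lambda on one split and reuse it on the held-out rows.

```python
import numpy as np
from scipy.stats import boxcox, skew
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(42)
lot_area = rng.lognormal(mean=9.0, sigma=0.5, size=1000)  # hypothetical stand-in

fit_part, holdout = train_test_split(lot_area, test_size=0.2, random_state=0)

# Estimate lambda on the fitting split only...
fit_transformed, lam = boxcox(fit_part + 1)
# ...then reuse that lambda on the held-out rows instead of refitting.
holdout_transformed = boxcox(holdout + 1, lmbda=lam)

print(f"lambda: {lam:.3f}")
print(f"skew before: {skew(fit_part):.2f}, after: {skew(fit_transformed):.2f}")
```

When a fixed lambda is passed, scipy's boxcox returns only the transformed array, which is why the second call has a single return value.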

Craft Domain‑Driven Ratios & Interactions

The Principle

Raw counts rarely capture value density. Real‑estate appraisers think in price per square foot, age of remodel, quality × size — not in isolated columns.

Five Features That Matter

| New Feature | Formula | Intuition |
| --- | --- | --- |
| TotalSF | GrLivArea + TotalBsmtSF | Total usable floor area |
| PricePerSF | SalePrice / TotalSF | Bang for the buck (built from the target, so exploration only) |
| Qual_SF | OverallQual * TotalSF | Big + well‑built homes sell higher |
| Age | YrSold - YearBuilt | Older homes often discount |
| RemodelAge | YrSold - YearRemodAdd | Recent remodel bumps value |

Add these to your training frame:

train["TotalSF"] = train["GrLivArea"] + train["TotalBsmtSF"]
# y holds log1p(SalePrice), so expm1 recovers the price. This column is built from
# the target: use it for exploration only, never as a model input.
train["PricePerSF"] = np.expm1(y) / train["TotalSF"]
train["Qual_SF"] = train["OverallQual"] * train["TotalSF"]
train["Age"] = train["YrSold"] - train["YearBuilt"]
train["RemodelAge"] = train["YrSold"] - train["YearRemodAdd"]

These single lines often outrank entire blocks of one‑hot categories in importance.
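Because test rows need exactly the same columns, it can help to wrap the row-wise features in one function and call it on both frames. A minimal sketch: the helper name add_domain_features and the two-row demo frame are assumptions for illustration. PricePerSF is left out on purpose, since it needs SalePrice and test rows have none.

```python
import pandas as pd


def add_domain_features(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with the row-wise domain features added."""
    out = df.copy()
    out["TotalSF"] = out["GrLivArea"] + out["TotalBsmtSF"]
    out["Qual_SF"] = out["OverallQual"] * out["TotalSF"]
    out["Age"] = out["YrSold"] - out["YearBuilt"]
    out["RemodelAge"] = out["YrSold"] - out["YearRemodAdd"]
    return out


# Two hypothetical rows with the raw columns the features depend on
demo = pd.DataFrame({
    "GrLivArea": [1710, 1262],
    "TotalBsmtSF": [856, 1262],
    "OverallQual": [7, 6],
    "YrSold": [2008, 2007],
    "YearBuilt": [2003, 1976],
    "YearRemodAdd": [2003, 1976],
})
features = add_domain_features(demo)
print(features[["TotalSF", "Qual_SF", "Age", "RemodelAge"]])
```

Each feature uses only values from its own row, so calling the function on train and test separately gives the same result as calling it on both at once.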


Unlock High‑Cardinality Categories with Safe Target Encoding

Neighborhood, Exterior1st, SaleType — too many levels for neat one‑hot vectors. Target encoding maps each category to the mean (or smoothed mean) of the target.
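What "smoothed mean" can look like in code: the sketch below uses simple additive smoothing, which pulls each category mean toward the global mean with a pseudo-count m. This is one common formulation chosen for illustration. It is not the formula category_encoders uses internally, and the function name and the value of m are assumptions.

```python
import pandas as pd


def smoothed_target_mean(cat: pd.Series, y: pd.Series, m: float = 10.0) -> pd.Series:
    """Per-category target mean, shrunk toward the global mean by weight m."""
    prior = y.mean()
    stats = y.groupby(cat).agg(["mean", "count"])
    return (stats["count"] * stats["mean"] + m * prior) / (stats["count"] + m)


cat = pd.Series(["A", "A", "A", "A", "B"])
y = pd.Series([10.0, 12.0, 11.0, 11.0, 20.0])

enc = smoothed_target_mean(cat, y, m=2.0)
print(enc)
```

Category B has a single row, so its estimate moves from 20.0 most of the way toward the global mean of 12.8, while the four-row category A barely shifts.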

Danger: Leakage

If you compute the mean on all rows, each observation learns from its own label — instant overfit.
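A quick way to see the overfit is to encode a category that carries no signal at all. In this sketch both the category and the target are random noise (all data is synthetic and hypothetical), so any correlation between the encoding and the target is pure leakage.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import KFold

rng = np.random.default_rng(0)
n = 1000
df = pd.DataFrame({
    "cat": rng.integers(0, 200, size=n),  # 200 levels, about 5 rows each
    "y": rng.normal(size=n),              # pure noise: nothing to learn
})

# Naive: each row's encoding includes its own label.
naive = df.groupby("cat")["y"].transform("mean")

# Out-of-fold: each row's encoding uses labels from the other folds only.
oof = pd.Series(np.nan, index=df.index)
for tr, val in KFold(n_splits=5, shuffle=True, random_state=0).split(df):
    fold_train = df.iloc[tr]
    means = fold_train.groupby("cat")["y"].mean()
    # Levels unseen in the training folds fall back to the training-fold mean.
    encoded = df.iloc[val]["cat"].map(means).fillna(fold_train["y"].mean())
    oof.iloc[val] = encoded.to_numpy()

naive_corr = np.corrcoef(naive, df["y"])[0, 1]
oof_corr = np.corrcoef(oof, df["y"])[0, 1]
print(f"naive: {naive_corr:.2f}, out-of-fold: {oof_corr:.2f}")
```

The naive encoding looks predictive even though there is nothing to predict. The out-of-fold version stays near zero, which is the honest answer.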

K‑Fold Encoding in Practice

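from category_encoders.target_encoder import TargetEncoder
from sklearn.model_selection import KFold

kf = KFold(n_splits=5, shuffle=True, random_state=42)
train["Neighborhood_TE"] = 0.0
te_pos = train.columns.get_loc("Neighborhood_TE")

for tr, val in kf.split(train):
    enc = TargetEncoder(cols=["Neighborhood"], smoothing=0.3)
    # Fit on the other folds only, so no row contributes its own price
    enc.fit(train.iloc[tr][["Neighborhood"]], y.iloc[tr])
    encoded = enc.transform(train.iloc[val][["Neighborhood"]])["Neighborhood"]
    # kf.split yields positions, not index labels, so write back with iloc
    train.iloc[val, te_pos] = encoded.to_numpy()

Now every row’s encoding comes from folds that exclude its own price.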

Guard Against Leakage Everywhere

Leakage sneaks in beyond encoding:

- Global statistics: median price per year, if computed on the full set.

- Date math with test rows: anchoring ages to a reference year (such as the latest YrSold) taken from train and unseen test combined. Row‑wise differences like YrSold - YearBuilt are safe.

- Filling missing values with whole‑set means.

Rule: All transforms must live inside the pipeline that is fit on training folds only.
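Here is the rule applied to imputation, as a minimal sketch on synthetic data. The arrays, the Ridge model, and the 10% missing rate are assumptions for illustration. Because the imputer sits inside the pipeline, cross_val_score refits its medians on each training fold and never sees the validation rows.

```python
import numpy as np
from sklearn.impute import SimpleImputer
from sklearn.linear_model import Ridge
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
X = rng.normal(size=(300, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.1, size=300)
X[rng.random(X.shape) < 0.1] = np.nan  # knock out roughly 10% of entries

pipe = Pipeline([
    ("impute", SimpleImputer(strategy="median")),  # medians learned per training fold
    ("scale", StandardScaler()),                   # mean and std learned per training fold
    ("model", Ridge(alpha=1.0)),
])

scores = -cross_val_score(pipe, X, y, cv=5, scoring="neg_root_mean_squared_error")
print(scores.round(3))
```

The leaky alternative would be calling the imputer on the full matrix before cross-validation, which bakes validation-row statistics into every fold.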


Assemble a Leak‑Proof Pipeline

from sklearn.pipeline import Pipeline
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import StandardScaler
from sklearn.ensemble import GradientBoostingRegressor

num = ["TotalSF", "Qual_SF", "Age", "RemodelAge", "LotArea"]
cat = ["Neighborhood_TE"]  # already numeric, keep simple

pre = ColumnTransformer([
    ("num", StandardScaler(), num),
    ("cat", "passthrough", cat),
])

pipe = Pipeline([
    ("prep", pre),
    ("model", GradientBoostingRegressor(random_state=42)),
])

Cross‑validate:

from sklearn.model_selection import cross_val_score

# y is log1p(SalePrice), so this RMSE is in log-price units
scores = cross_val_score(pipe, train[num + cat], y, cv=5, scoring="neg_root_mean_squared_error")
rmse = -scores.mean()
print(f"CV RMSE: {rmse:.4f}")

Key Takeaways

- Fix skew early — log target, Box‑Cox features.

- Inject domain ratios — total area, quality × size, price per sqft.

- Use K‑fold target encoding for rich categories; dodge leakage.

- Build every transform inside a pipeline; never touch test data in prep.

- Measure RMSE after each tweak so you learn which steps move the needle.
